What Are Pre-trained Models?

A pre-trained model is a machine learning (ML) model that has been trained on a large dataset and can be fine-tuned for a specific task, as defined by Encord.

Or

A pretrained AI model is a deep learning model that’s trained on large datasets to accomplish a specific task, and it can be used as is or customized to suit application requirements across multiple industries.

as defined by the NVIDIA blog.

In simple terms, a pre-trained AI model is a deep learning model, a type of model loosely inspired by how neurons work in the human brain, that is used to find patterns in data or make predictions based on given data.
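To make the "neuron" idea a little more concrete, here is a minimal sketch of a single artificial neuron in plain Python. It computes a weighted sum of its inputs plus a bias and passes the result through a sigmoid activation. The input values, weights, and bias are made-up numbers for illustration, not taken from any real model. A deep learning model stacks many such neurons into layers, and "training" means adjusting the weights and biases.

```python
import math

def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))

def neuron(inputs: list[float], weights: list[float], bias: float) -> float:
    """One artificial neuron: weighted sum of inputs plus bias, then activation."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return sigmoid(z)

# Hypothetical example values, chosen only for illustration.
inputs = [0.5, -1.0, 2.0]
weights = [0.8, 0.2, -0.4]
bias = 0.1
print(round(neuron(inputs, weights, bias), 4))  # prints 0.3775
```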

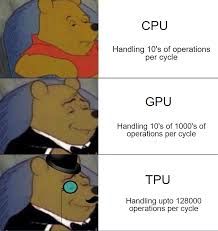

Training models like these has become practical thanks to recent improvements in hardware and the introduction of very powerful processors: the CPU (Central Processing Unit), the GPU (Graphics Processing Unit), and the TPU (Tensor Processing Unit).

CPU: General-purpose processing.

GPU: Parallel processing, primarily for graphics and scientific computing.

TPU: Specialized processing for machine learning tasks.

The TPU is a type of processor developed by Google specifically for accelerating machine learning workloads. It can be used through Google Cloud services. For neural networks and deep learning, the TPU can be the most useful of the three because it is optimized for performing tensor operations, which are the backbone of many machine learning algorithms, especially those involving neural networks.
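A "tensor operation" here mostly means multiplying multi-dimensional arrays. As a minimal sketch in plain Python (no real TPU, GPU, or framework involved), the forward pass of one fully connected neural-network layer over a batch of inputs is a matrix multiplication plus a bias. Each output element is computed independently of the others, which is why hardware that performs many multiply-add operations in parallel can speed this up so much. The shapes and values below are arbitrary illustrations.

```python
def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Multiply an (n x k) matrix by a (k x m) matrix."""
    n, k, m = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions must match")
    # Every out[i][j] depends only on row i of a and column j of b,
    # so all n*m entries could be computed in parallel.
    return [[sum(a[i][p] * b[p][j] for p in range(k)) for j in range(m)]
            for i in range(n)]

def dense_layer(x, w, bias):
    """Fully connected layer: out = x @ w + bias (bias added to every row)."""
    out = matmul(x, w)
    return [[v + bias[j] for j, v in enumerate(row)] for row in out]

# A batch of 2 inputs with 3 features each, mapped to 2 outputs.
x = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
w = [[1.0, 0.0],
     [0.0, 1.0],
     [1.0, 1.0]]
bias = [0.0, 1.0]
print(dense_layer(x, w, bias))  # prints [[4.0, 6.0], [10.0, 12.0]]
```

A real network repeats this kind of operation across many layers and much larger matrices, so the number of multiply-adds grows very quickly.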

GPUs are also very useful for deep learning, but that is a lesson for another day.

Let's continue with pre-trained models.

WHY PRE-TRAINED MODELS?

Pre-trained models can be useful for developers and researchers because they save time and resources, and they can often be just as effective as custom models. Training a model from scratch raises three main problems: the dataset, the computing resources, and the time. Let's take a simple example, the BERT model:

| Hardware | Model | Training Time |
|---|---|---|
| TPU (16 chips) | BERT-Base | 4 days |
| TPU (64 chips) | BERT-Large | 4 days |
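One way to read the table is to convert it into total chip-time, a rough measure of the compute used. This is a simplifying assumption: it ignores per-chip speed, cost, and utilization. A small sketch of that arithmetic:

```python
# Figures copied from the table: (model, number of TPU chips, training days).
runs = [
    ("BERT-Base", 16, 4),
    ("BERT-Large", 64, 4),
]

def chip_hours(chips: int, days: int) -> int:
    """Total chip-time, assuming every chip runs for the whole training period."""
    return chips * days * 24

for model, chips, days in runs:
    print(f"{model}: {chips * days} chip-days = {chip_hours(chips, days)} chip-hours")
# BERT-Base: 64 chip-days = 1536 chip-hours
# BERT-Large: 256 chip-days = 6144 chip-hours
```

Both runs took the same 4 days of wall-clock time, but BERT-Large used four times as many chips, so it consumed four times the chip-time.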

You can see how long it takes to train a model and how many resources it uses; this cost is hard for small developers and researchers to bear. Pre-trained models were therefore released to save the time and resources needed to train models from scratch. Many large pre-trained models have now been released, such as GPT-2, BERT, Gemma 2, ELMo, Transformer-XL, RoBERTa, VGG, ResNet, and Inception, so researchers can skip steps and start building from an existing point instead of from scratch.

In other words, models like GPT-2 and BERT have already been taught general knowledge. We bring our own specific dataset and fine-tune the model on it, so it becomes useful in a specific area.
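The pre-train-then-fine-tune idea can be shown with a deliberately tiny toy, sketched in plain Python under loud assumptions: a one-feature linear model (y = w*x + b) stands in for a real network, both datasets are synthetic, and "fine-tuning" is simply continued gradient descent that starts from the pre-trained weights. This is not how BERT or GPT-2 are actually fine-tuned; it only shows why starting from learned weights helps when the task-specific data is small.

```python
# Toy illustration only: a 1-feature linear model stands in for a real network.

def predict(w, b, x):
    return w * x + b

def mse(w, b, data):
    """Mean squared error of the model on a list of (x, y) pairs."""
    return sum((predict(w, b, x) - y) ** 2 for x, y in data) / len(data)

def train(data, w=0.0, b=0.0, lr=0.1, epochs=100):
    """Full-batch gradient descent on MSE, starting from the given (w, b)."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (predict(w, b, x) - y) * x for x, y in data) / n
        grad_b = sum(2 * (predict(w, b, x) - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

xs = [i / 10 for i in range(-20, 21)]

# 1) "Pre-training": plenty of general data following y = 3x + 1.
general_data = [(x, 3 * x + 1) for x in xs]
pre_w, pre_b = train(general_data, epochs=200)

# 2) Our specific task: a related pattern (y = 3x + 2), but only 3 examples.
small_task_data = [(x, 3 * x + 2) for x in (0.0, 0.1, 0.2)]
task_test_data = [(x, 3 * x + 2) for x in xs]

# 3) Same short training budget for both: fine-tune vs. start from scratch.
ft_w, ft_b = train(small_task_data, w=pre_w, b=pre_b, epochs=20)
sc_w, sc_b = train(small_task_data, epochs=20)

print(f"fine-tuned test MSE:   {mse(ft_w, ft_b, task_test_data):.4f}")
print(f"from-scratch test MSE: {mse(sc_w, sc_b, task_test_data):.4f}")
```

With only three examples covering a narrow range of x, the from-scratch model cannot pin down the slope in 20 steps, while the fine-tuned model keeps the slope it learned during pre-training and mostly adjusts the intercept, so its test error comes out far lower.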

DISADVANTAGES OF PRE-TRAINED MODELS

Domain-Specific Features: Pretrained models are often trained on large and diverse datasets, but they may not capture domain-specific features relevant to your specific task.

Overfitting: If the pretrained model is very large and your dataset is relatively small, there's a risk of overfitting. The model may memorize the training data rather than learning generalizable patterns.

Lack of Transparency: Pretrained models are often black boxes, making it challenging to understand how they make predictions. This lack of transparency can be problematic in applications where interpretability and explainability are important.

Bias: Pre-existing biases in pre-trained models can arise from the data they were originally trained on. Many pre-trained models, like BERT or GPT, were trained primarily on large English datasets. This can create several issues when using these models for languages like Swahili: the model has seen comparatively little Swahili text, so its outputs for Swahili may be less accurate or may reflect English-centric assumptions.
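One concrete way an English-centric model can struggle with Swahili is tokenization: a tokenizer whose vocabulary was built from English text tends to break Swahili words into many small pieces. The sketch below assumes a hypothetical, tiny "English" vocabulary and a simplified greedy longest-match subword splitter in the style of WordPiece; real tokenizers have tens of thousands of entries, so the numbers here only illustrate the mechanism.

```python
import string

# Hypothetical, tiny "English-trained" vocabulary: a few whole English words,
# plus every single letter (word-initial) and "##letter" (word-continuation)
# so that any lowercase word can still be tokenized.
ENGLISH_WORDS = {"the", "children", "are", "playing", "in", "school"}
VOCAB = (ENGLISH_WORDS
         | set(string.ascii_lowercase)
         | {"##" + c for c in string.ascii_lowercase})

def tokenize_word(word: str, vocab: set[str]) -> list[str]:
    """Greedy longest-match-first subword split (WordPiece-style)."""
    pieces, start = [], 0
    while start < len(word):
        end = len(word)
        while end > start:
            piece = word[start:end] if start == 0 else "##" + word[start:end]
            if piece in vocab:
                pieces.append(piece)
                break
            end -= 1
        else:
            return ["[UNK]"]  # no piece matched (e.g. a non-letter character)
        start = end
    return pieces

def tokenize(sentence: str, vocab: set[str]) -> list[str]:
    return [p for word in sentence.lower().split() for p in tokenize_word(word, vocab)]

english = "the children are playing in school"
swahili = "watoto wanacheza shuleni"  # roughly the same meaning in Swahili
print(len(tokenize(english, VOCAB)), tokenize(english, VOCAB))  # 6 tokens
print(len(tokenize(swahili, VOCAB)), tokenize(swahili, VOCAB))  # 22 tokens
```

The English sentence maps to one token per word, while each Swahili word falls apart into single letters, so the model receives longer, less meaningful input for the same content.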

Prepared By Alfred Baraka

Computer Science Student

Data Science Enthusiast